diff --git a/docs/hub/models-adding-libraries.md b/docs/hub/models-adding-libraries.md

index 39d39b751..2351757c1 100644

--- a/docs/hub/models-adding-libraries.md

+++ b/docs/hub/models-adding-libraries.md

@@ -1,13 +1,13 @@

# Integrate your library with the Hub

-The Hugging Face Hub aims to facilitate sharing machine learning models, checkpoints, and artifacts. This endeavor includes integrating the Hub into many of the amazing third-party libraries in the community. Some of the ones already integrated include [spaCy](https://spacy.io/usage/projects#huggingface_hub), [AllenNLP](https://allennlp.org/), and [timm](https://rwightman.github.io/pytorch-image-models/), among many others. Integration means users can download and upload files to the Hub directly from your library. We hope you will integrate your library and join us in democratizing artificial intelligence for everyone.

+The Hugging Face Hub aims to facilitate sharing machine learning models, checkpoints, and artifacts. This endeavor includes integrating the Hub into many of the amazing third-party libraries in the community. Some of the ones already integrated include [spaCy](https://spacy.io/usage/projects#huggingface_hub), [Sentence Transformers](https://sbert.net/), [OpenCLIP](https://github.com/mlfoundations/open_clip), and [timm](https://huggingface.co/docs/timm/index), among many others. Integration means users can download and upload files to the Hub directly from your library. We hope you will integrate your library and join us in democratizing artificial intelligence for everyone.

Integrating the Hub with your library provides many benefits, including:

- Free model hosting for you and your users.

- Built-in file versioning - even for huge files - made possible by [Git-LFS](https://git-lfs.github.com/).

-- All public models are powered by the [Inference API](https://huggingface.co/docs/api-inference/index).

-- In-browser widgets allow users to interact with your hosted models directly.

+- Community features (discussions, pull requests, likes).

+- Usage metrics for all models run with your library.

This tutorial will help you integrate the Hub into your library so your users can benefit from all the features offered by the Hub.

@@ -15,107 +15,84 @@ Before you begin, we recommend you create a [Hugging Face account](https://huggi

If you need help with the integration, feel free to open an [issue](https://github.com/huggingface/huggingface_hub/issues/new/choose), and we would be more than happy to help you.

-## Installation

+## Implementation

-1. Install the `huggingface_hub` library with pip in your environment:

+Integrating a library with the Hub usually means providing built-in methods that load models from the Hub and let users push new models to the Hub. This section covers the basics of how to do that using the `huggingface_hub` library. For more in-depth guidance, check out [this guide](https://huggingface.co/docs/huggingface_hub/guides/integrations).

- ```bash

- python -m pip install huggingface_hub

- ```

+### Installation

-2. Once you have successfully installed the `huggingface_hub` library, log in to your Hugging Face account:

+To integrate your library with the Hub, you will need to add the `huggingface_hub` library as a dependency:

- ```bash

- huggingface-cli login

- ```

+```bash

+pip install huggingface_hub

+```

- ```bash

- _| _| _| _| _|_|_| _|_|_| _|_|_| _| _| _|_|_| _|_|_|_| _|_| _|_|_| _|_|_|_|

- _| _| _| _| _| _| _| _|_| _| _| _| _| _| _| _|

- _|_|_|_| _| _| _| _|_| _| _|_| _| _| _| _| _| _|_| _|_|_| _|_|_|_| _| _|_|_|

- _| _| _| _| _| _| _| _| _| _| _|_| _| _| _| _| _| _| _|

- _| _| _|_| _|_|_| _|_|_| _|_|_| _| _| _|_|_| _| _| _| _|_|_| _|_|_|_|

+For more details about `huggingface_hub` installation, check out [this guide](https://huggingface.co/docs/huggingface_hub/installation).

-

- Username:

- Password:

- ```

+

-3. Alternatively, if you prefer working from a Jupyter or Colaboratory notebook, login with `notebook_login`:

+In this guide, we will focus on Python libraries. If you've implemented your library in JavaScript, you can use [`@huggingface/hub`](https://www.npmjs.com/package/@huggingface/hub) instead. The rest of the logic (i.e. hosting files, code samples, etc.) does not depend on the programming language.

- ```python

- >>> from huggingface_hub import notebook_login

- >>> notebook_login()

- ```

+```

+npm add @huggingface/hub

+```

- `notebook_login` will launch a widget in your notebook from which you can enter your Hugging Face credentials.

+

-## Download files from the Hub

+Users will need to authenticate once they have successfully installed the `huggingface_hub` library. The easiest way to authenticate is to save the token on the machine. Users can do that from the terminal using the `huggingface-cli login` command:

-Integration allows users to download your hosted files directly from the Hub using your library.

+```

+huggingface-cli login

+```

-Use the `hf_hub_download` function to retrieve a URL and download files from your repository. Downloaded files are stored in your cache: `~/.cache/huggingface/hub`. You don't have to re-download the file the next time you use it, and for larger files, this can save a lot of time. Furthermore, if the repository is updated with a new version of the file, `huggingface_hub` will automatically download the latest version and store it in the cache for you. Users don't have to worry about updating their files.

+The command tells them if they are already logged in and prompts them for their token. The token is then validated and saved in their `HF_HOME` directory (by default, at `~/.cache/huggingface/token`). Any script or library interacting with the Hub will use this token when sending requests.

-For example, download the `config.json` file from the [lysandre/arxiv-nlp](https://huggingface.co/lysandre/arxiv-nlp) repository:

+Alternatively, users can log in programmatically using `login()` in a notebook or a script:

-```python

->>> from huggingface_hub import hf_hub_download

->>> hf_hub_download(repo_id="lysandre/arxiv-nlp", filename="config.json")

+```py

+from huggingface_hub import login

+login()

```

-Download a specific version of the file by specifying the `revision` parameter. The `revision` parameter can be a branch name, tag, or commit hash.

+Authentication is optional when downloading files from public repos on the Hub.

-The commit hash must be a full-length hash instead of the shorter 7-character commit hash:

+### Download files from the Hub

-```python

->>> from huggingface_hub import hf_hub_download

->>> hf_hub_download(repo_id="lysandre/arxiv-nlp", filename="config.json", revision="877b84a8f93f2d619faa2a6e514a32beef88ab0a")

-```

+Integrations allow users to download a model from the Hub and instantiate it directly from your library. This is often made possible by providing a method (usually called `from_pretrained` or `load_from_hf`) that has to be specific to your library. To instantiate a model from the Hub, your library has to:

+- download files from the Hub. This is what we will discuss now.

+- instantiate the Python model from these files.

+

+Use the [`hf_hub_download`](https://huggingface.co/docs/huggingface_hub/main/en/package_reference/file_download#huggingface_hub.hf_hub_download) function to download files from a repository on the Hub. Downloaded files are stored in the cache: `~/.cache/huggingface/hub`. Users won't have to re-download a file the next time they use it, which saves a lot of time for large files. Furthermore, if the repository is updated with a new version of the file, `huggingface_hub` will automatically download the latest version and store it in the cache. Users don't have to worry about updating their files manually.

-Use the `cache_dir` parameter to change where a file is stored:

+For example, download the `config.json` file from the [lysandre/arxiv-nlp](https://huggingface.co/lysandre/arxiv-nlp) repository:

```python

>>> from huggingface_hub import hf_hub_download

->>> hf_hub_download(repo_id="lysandre/arxiv-nlp", filename="config.json", cache_dir="/home/lysandre/test")

+>>> config_path = hf_hub_download(repo_id="lysandre/arxiv-nlp", filename="config.json")

+>>> config_path

+'/home/lysandre/.cache/huggingface/hub/models--lysandre--arxiv-nlp/snapshots/894a9adde21d9a3e3843e6d5aeaaf01875c7fade/config.json'

```

-### Code sample

+`config_path` now contains a path to the downloaded file. You are guaranteed that the file exists and is up-to-date.
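
The two steps described above (download the files, then instantiate the model from them) can be sketched as a `from_pretrained` class method. This is only an illustrative sketch, not the API of any particular library: `ToyModel` and the `download_fn` parameter are hypothetical names introduced here, and the sketch assumes the repository contains a `config.json` file. Only `hf_hub_download` comes from `huggingface_hub`.

```python
import json
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ToyModel:
    """Hypothetical stand-in for a model class in your library."""

    config: dict

    @classmethod
    def from_pretrained(
        cls,
        repo_id: str,
        download_fn: Optional[Callable[..., str]] = None,
    ) -> "ToyModel":
        # `download_fn` is a hypothetical hook that makes the method easy to
        # test offline. By default, the real `hf_hub_download` is used.
        if download_fn is None:
            # Imported lazily so the class can be defined without the dependency.
            from huggingface_hub import hf_hub_download

            download_fn = hf_hub_download

        # Step 1: download the file (or reuse the cached copy) and get its local path.
        config_path = download_fn(repo_id=repo_id, filename="config.json")

        # Step 2: instantiate the Python object from the downloaded file.
        with open(config_path, encoding="utf-8") as f:
            return cls(config=json.load(f))
```

With `huggingface_hub` installed and network access available, a call such as `ToyModel.from_pretrained("lysandre/arxiv-nlp")` would download `config.json` from that repository and build the object from it. A real integration would typically download the weights in the same way and pass them to the model constructor.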

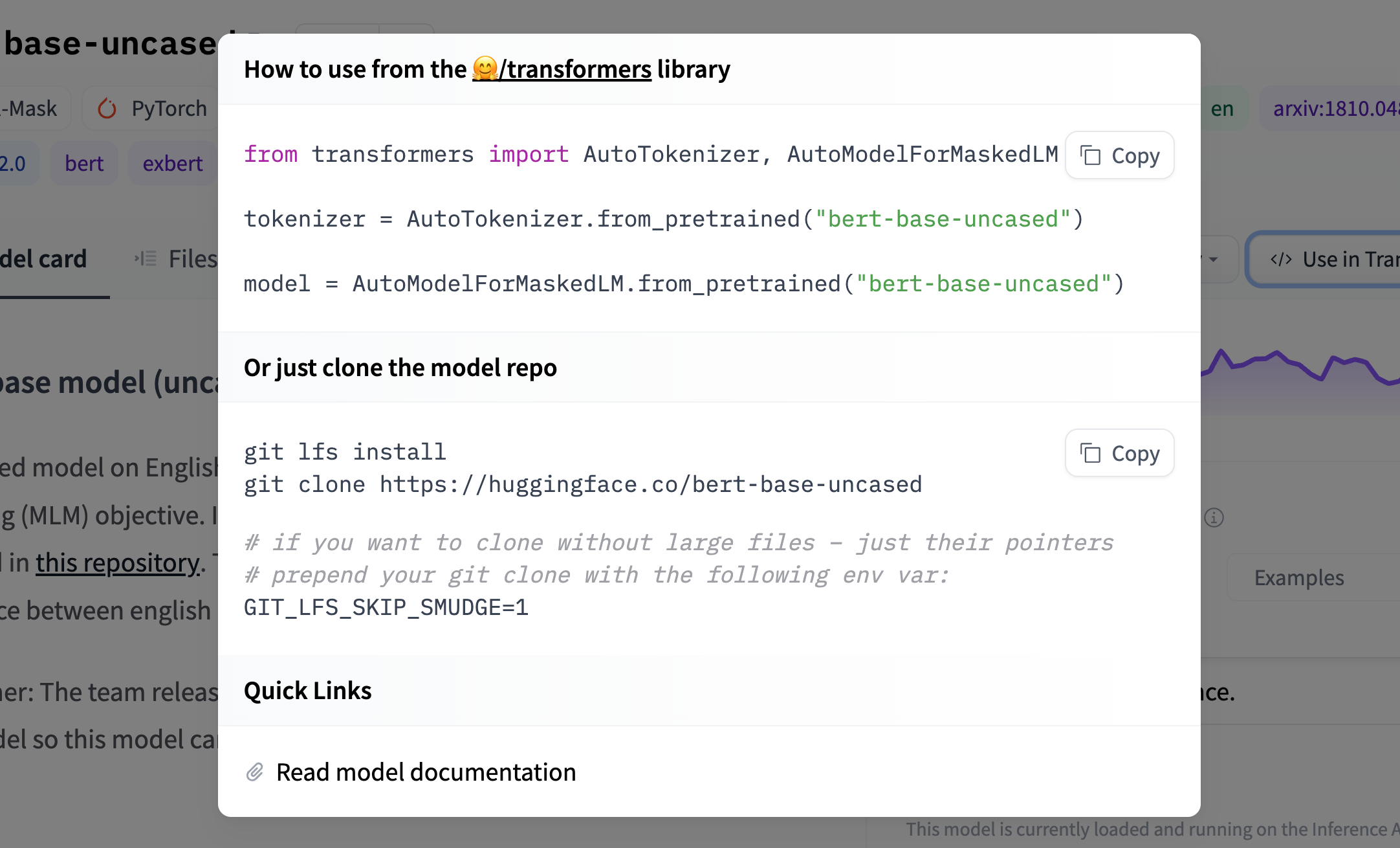

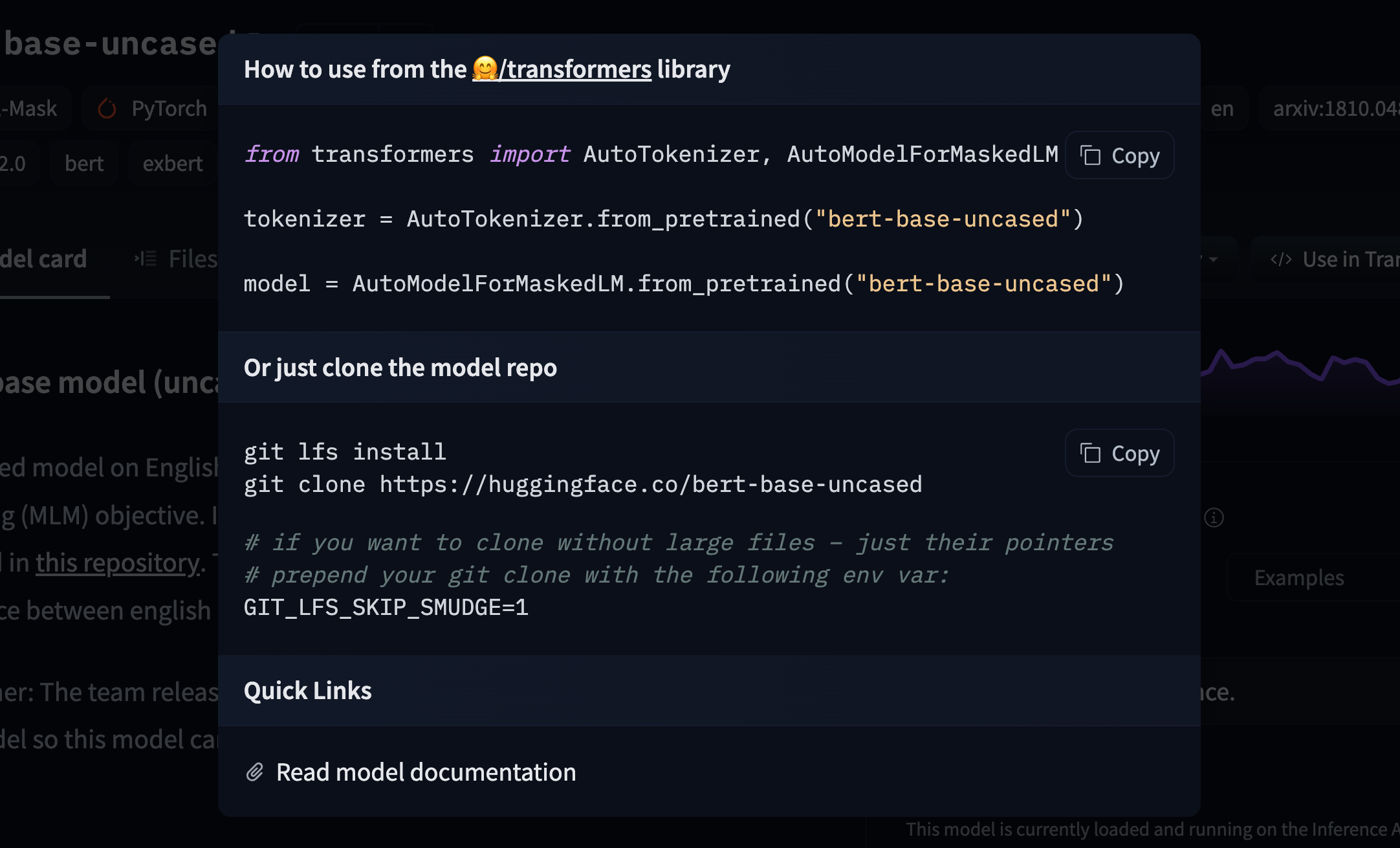

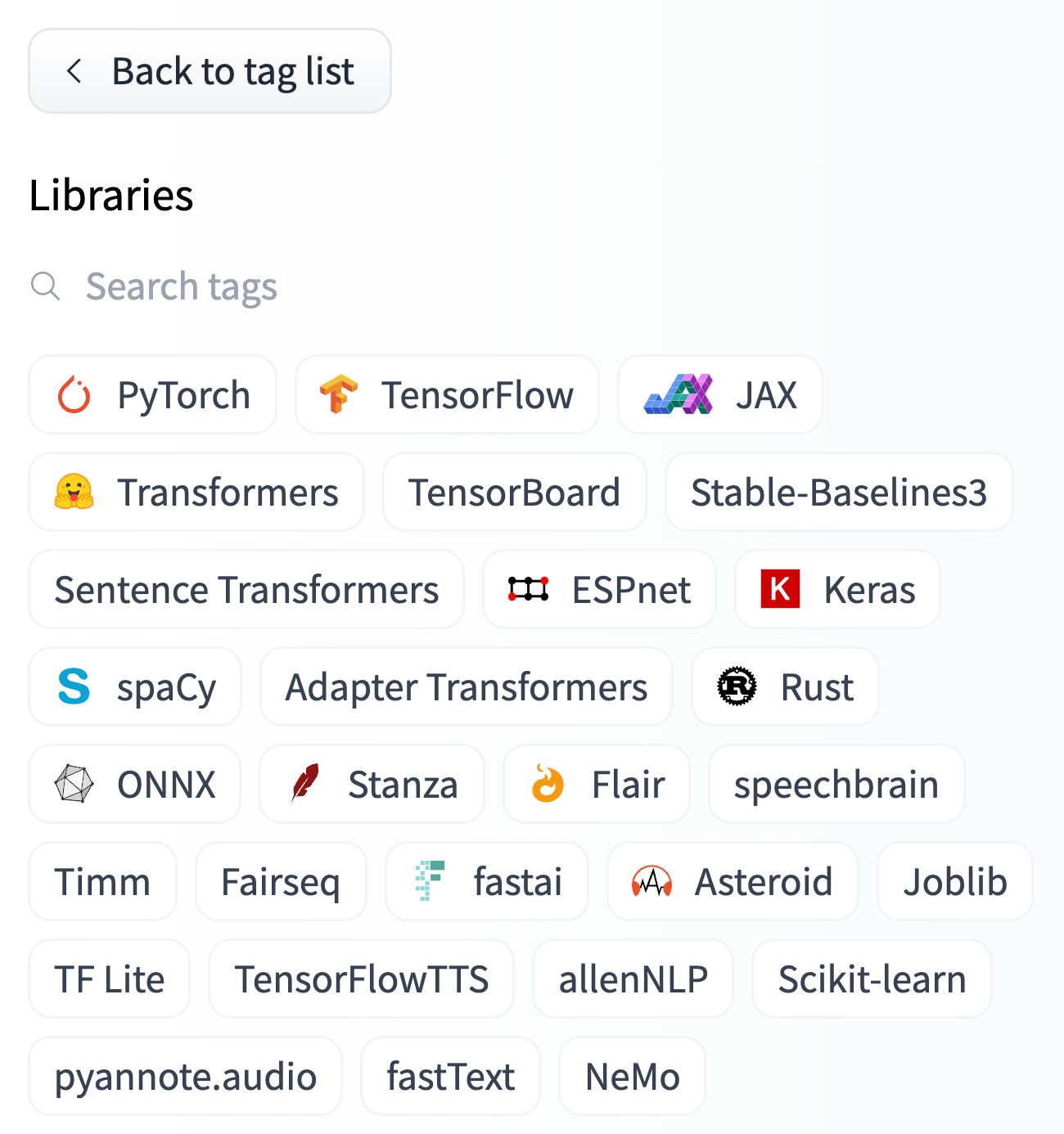

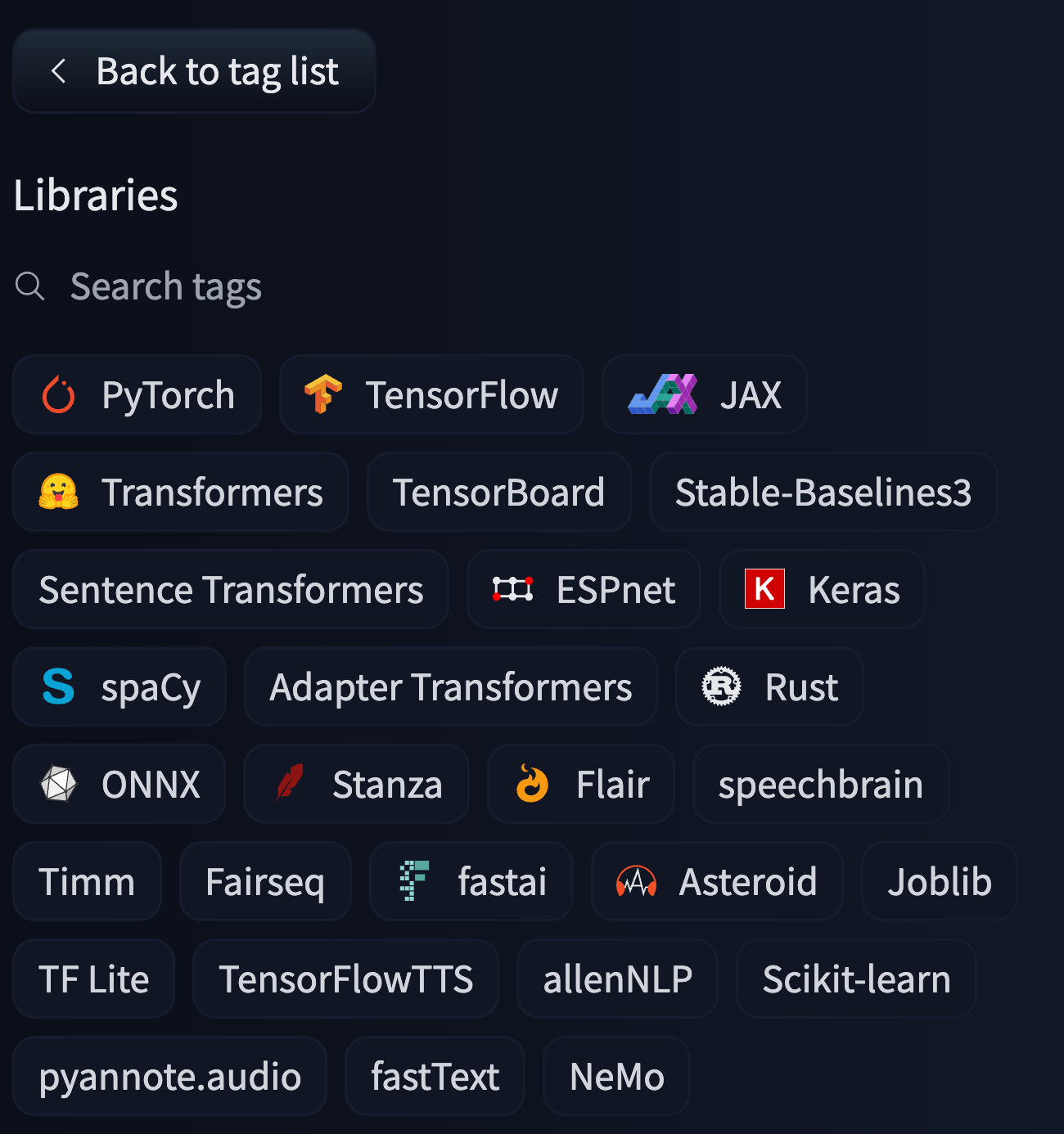

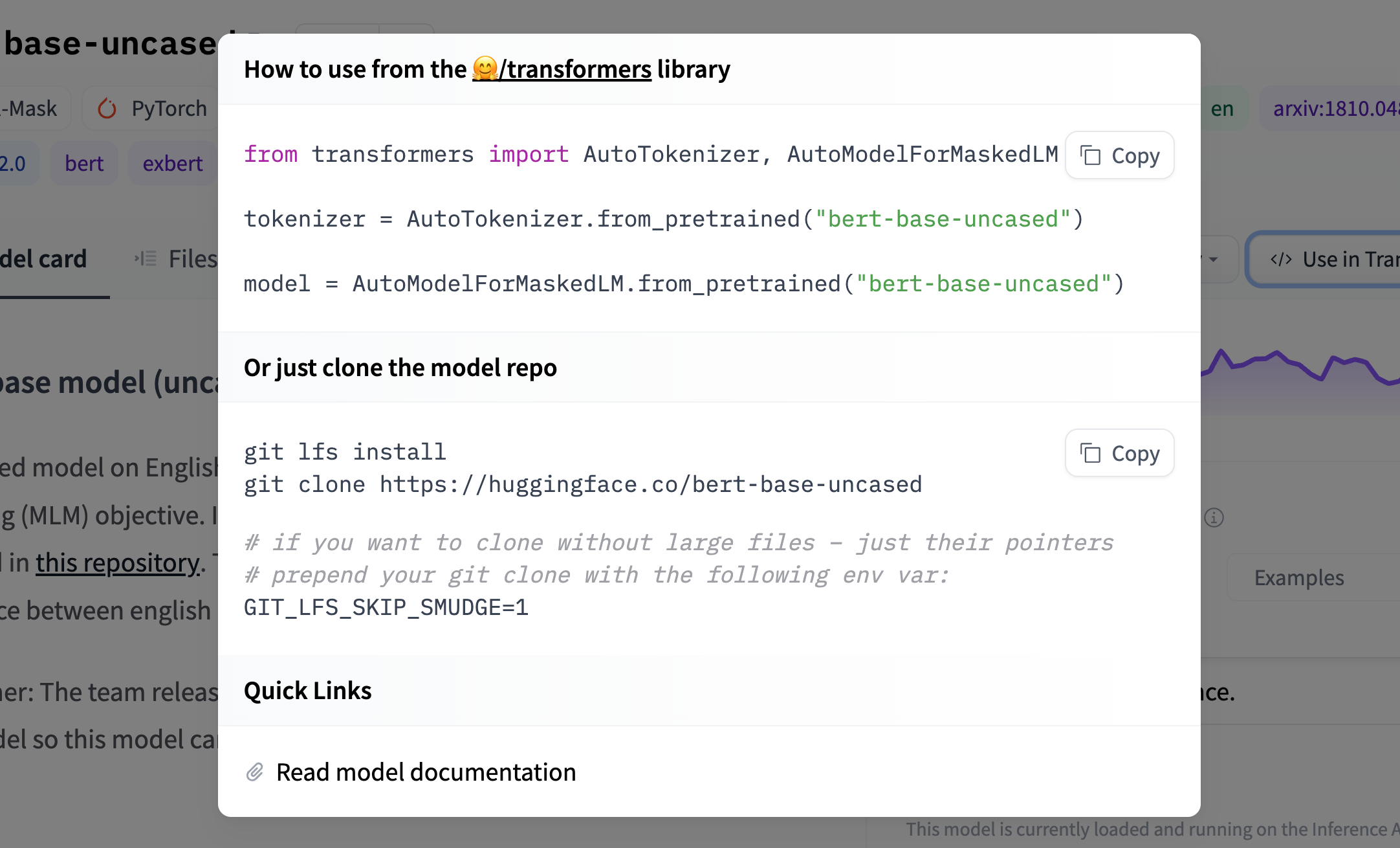

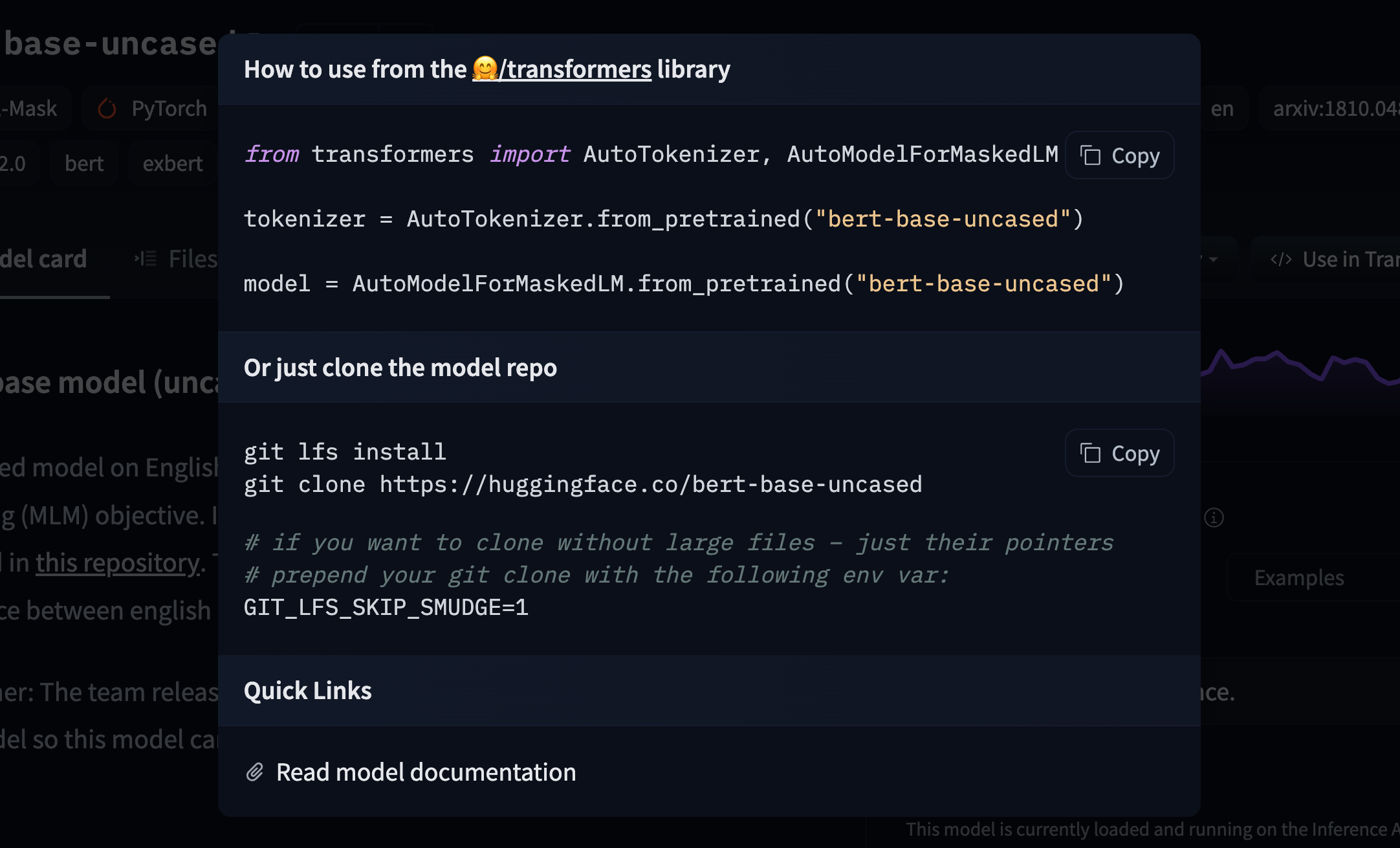

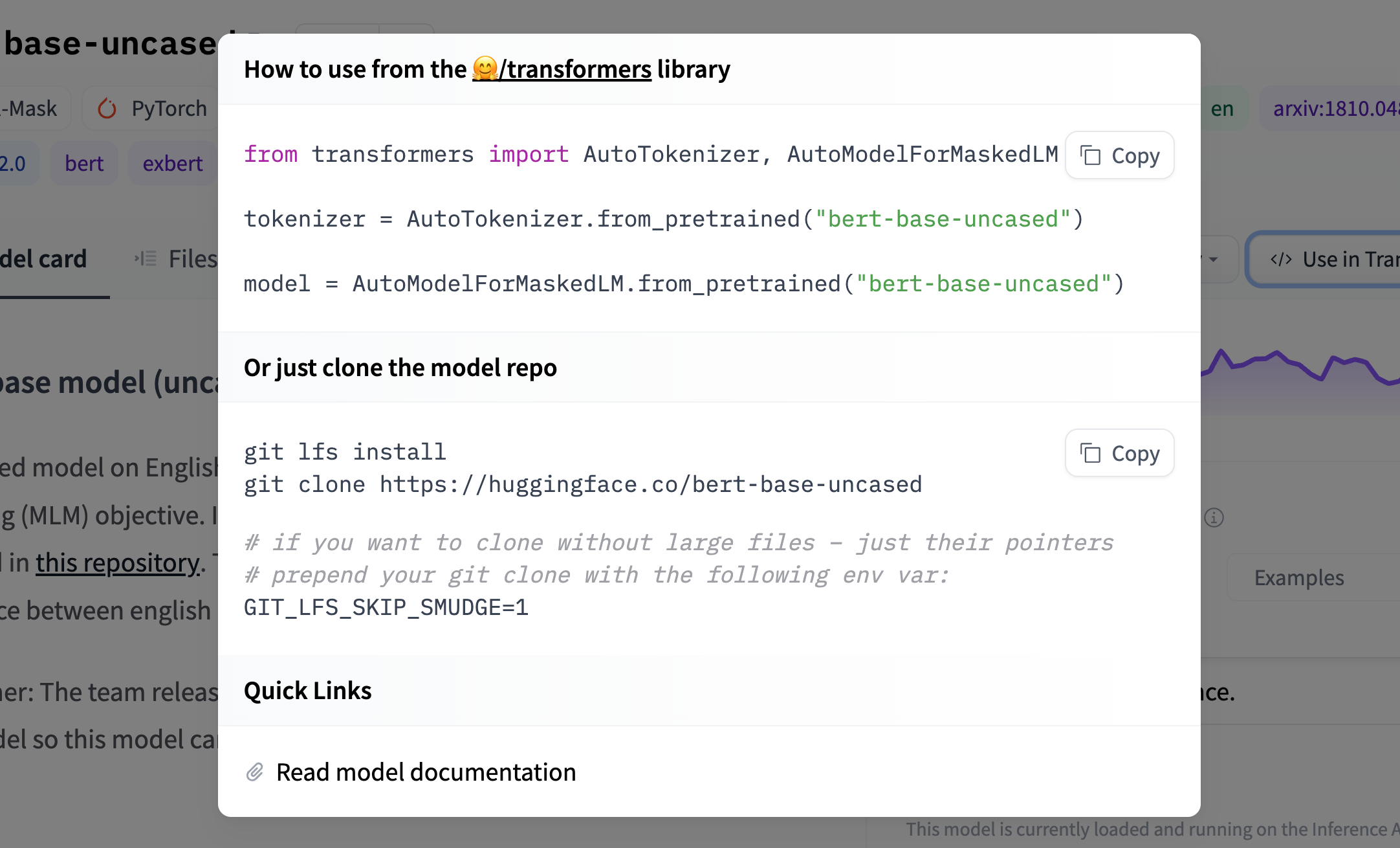

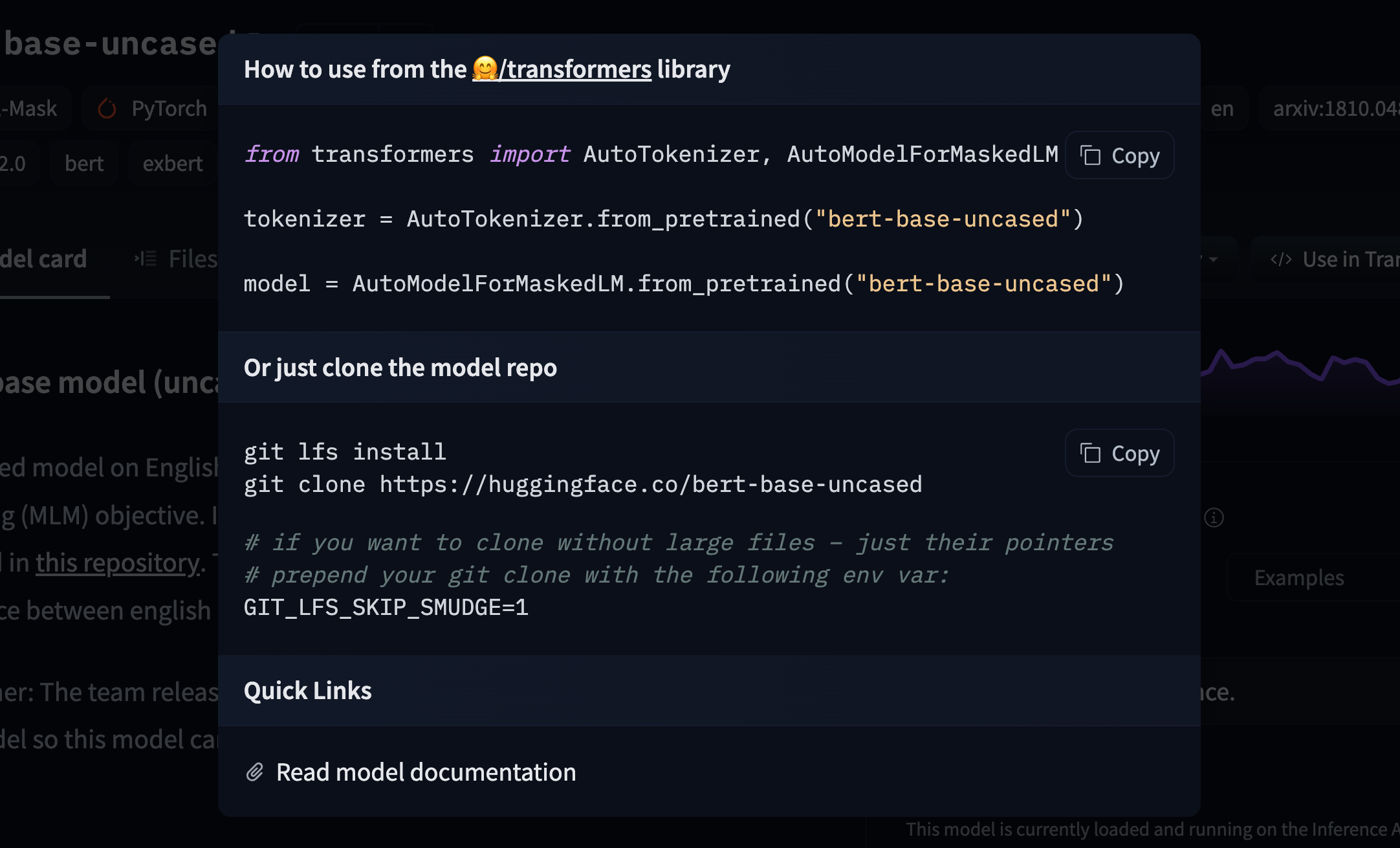

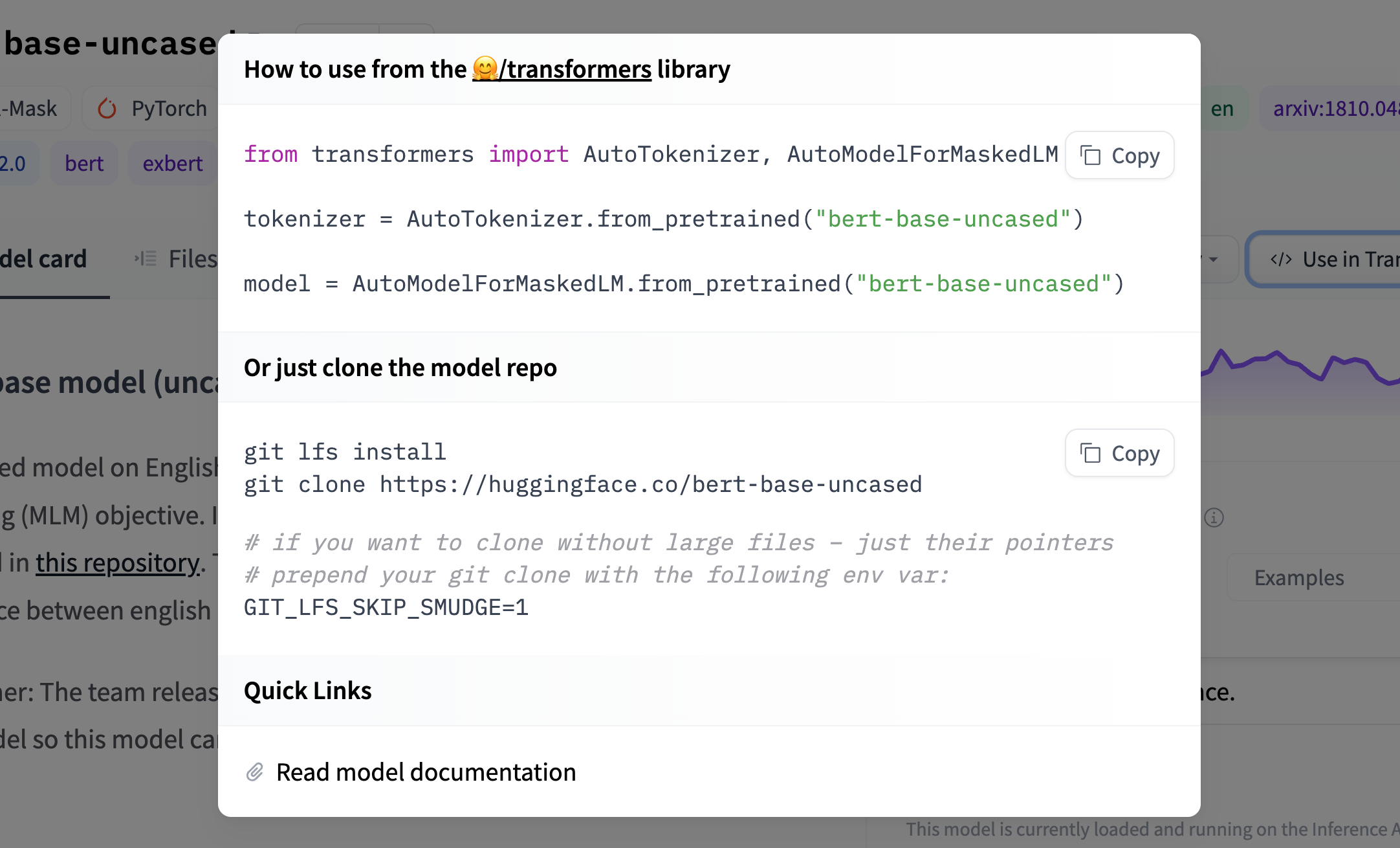

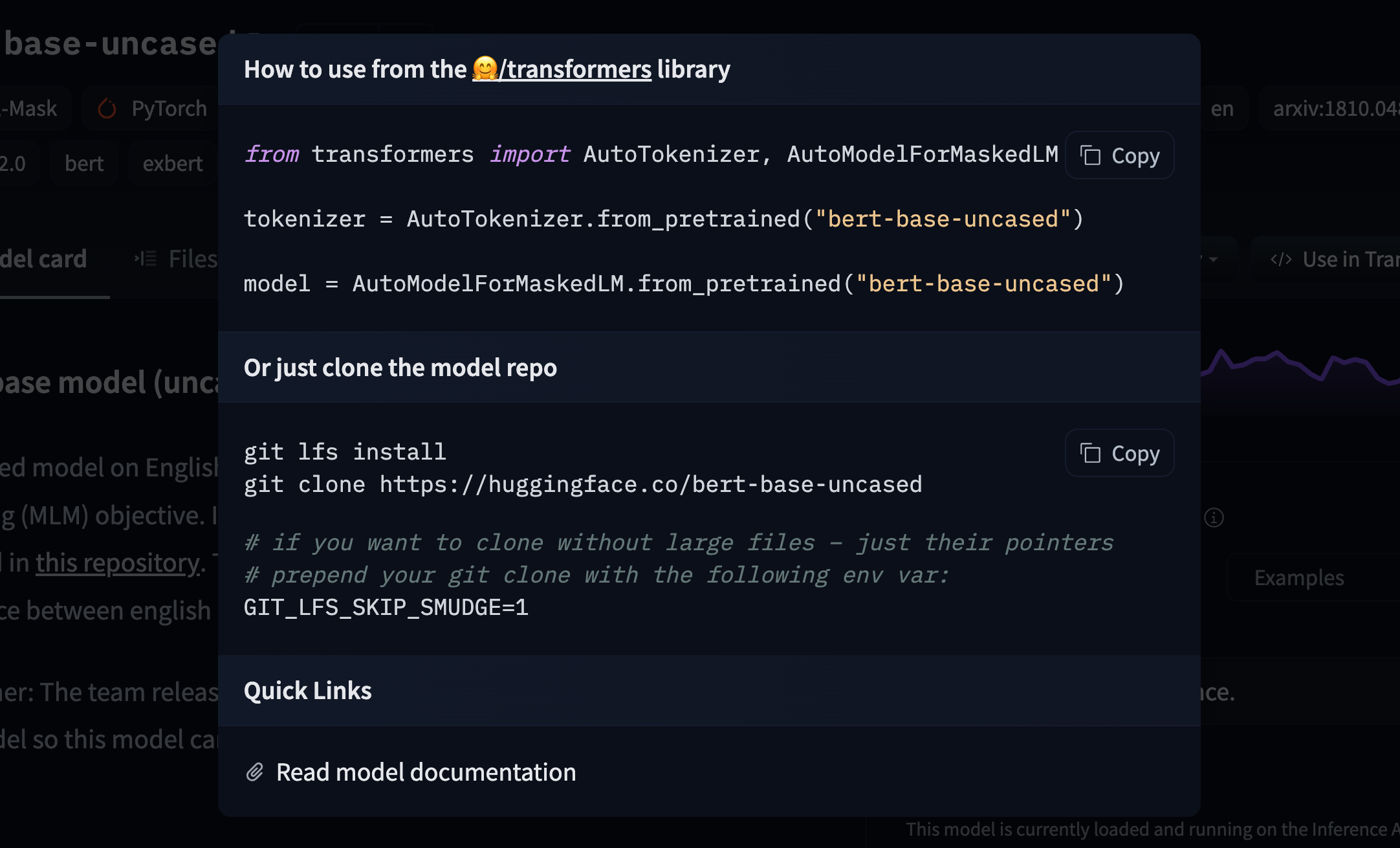

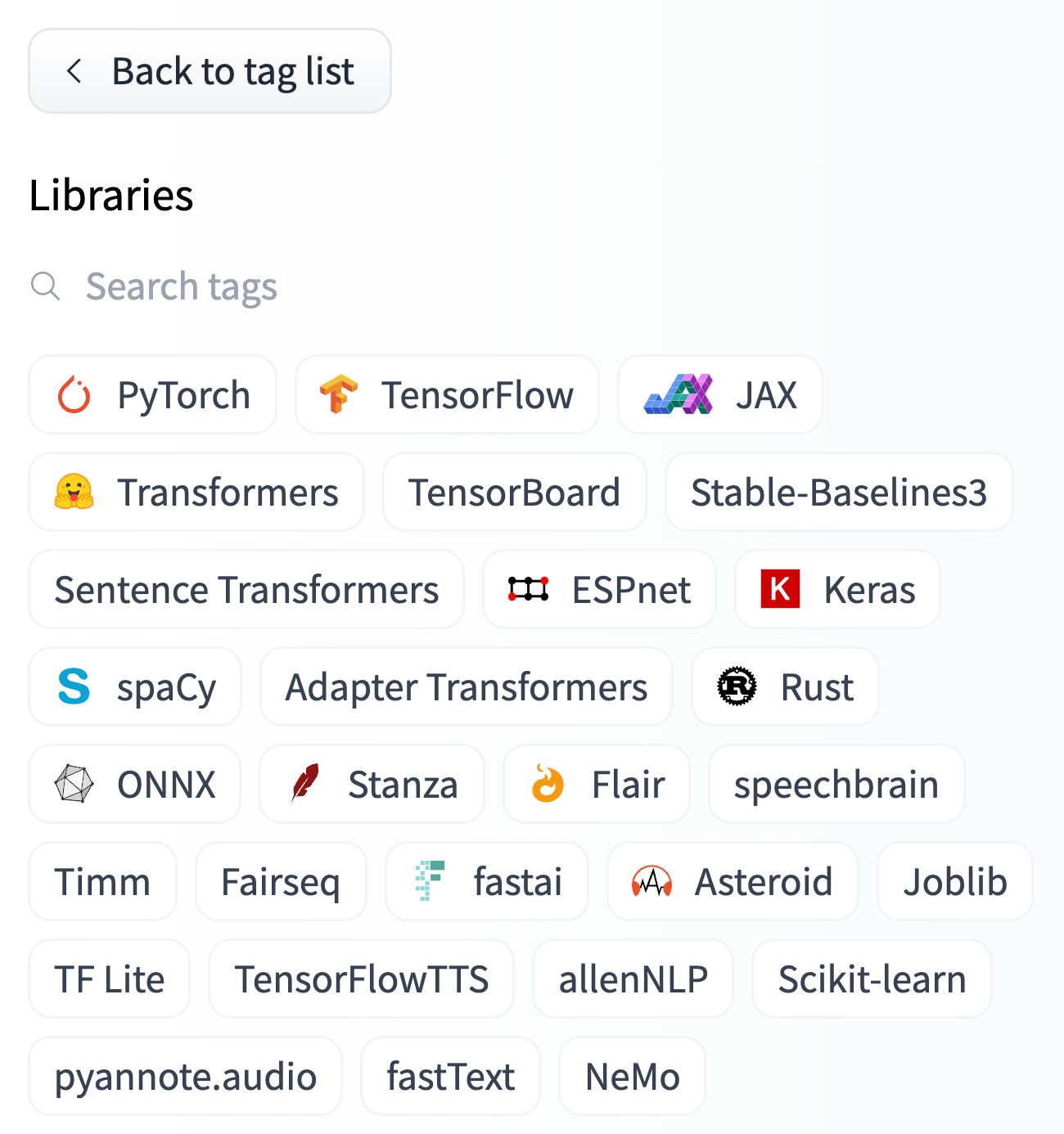

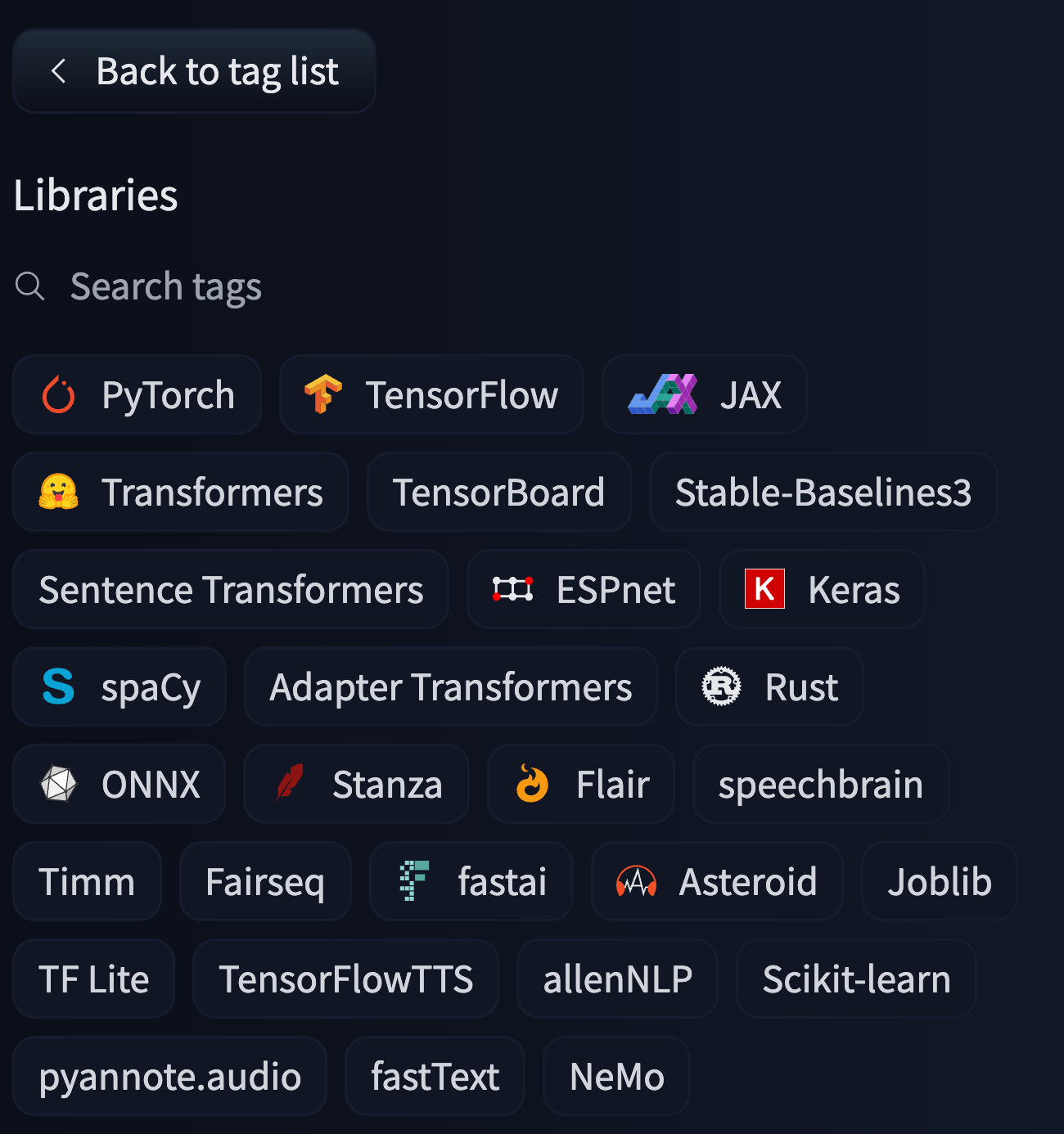

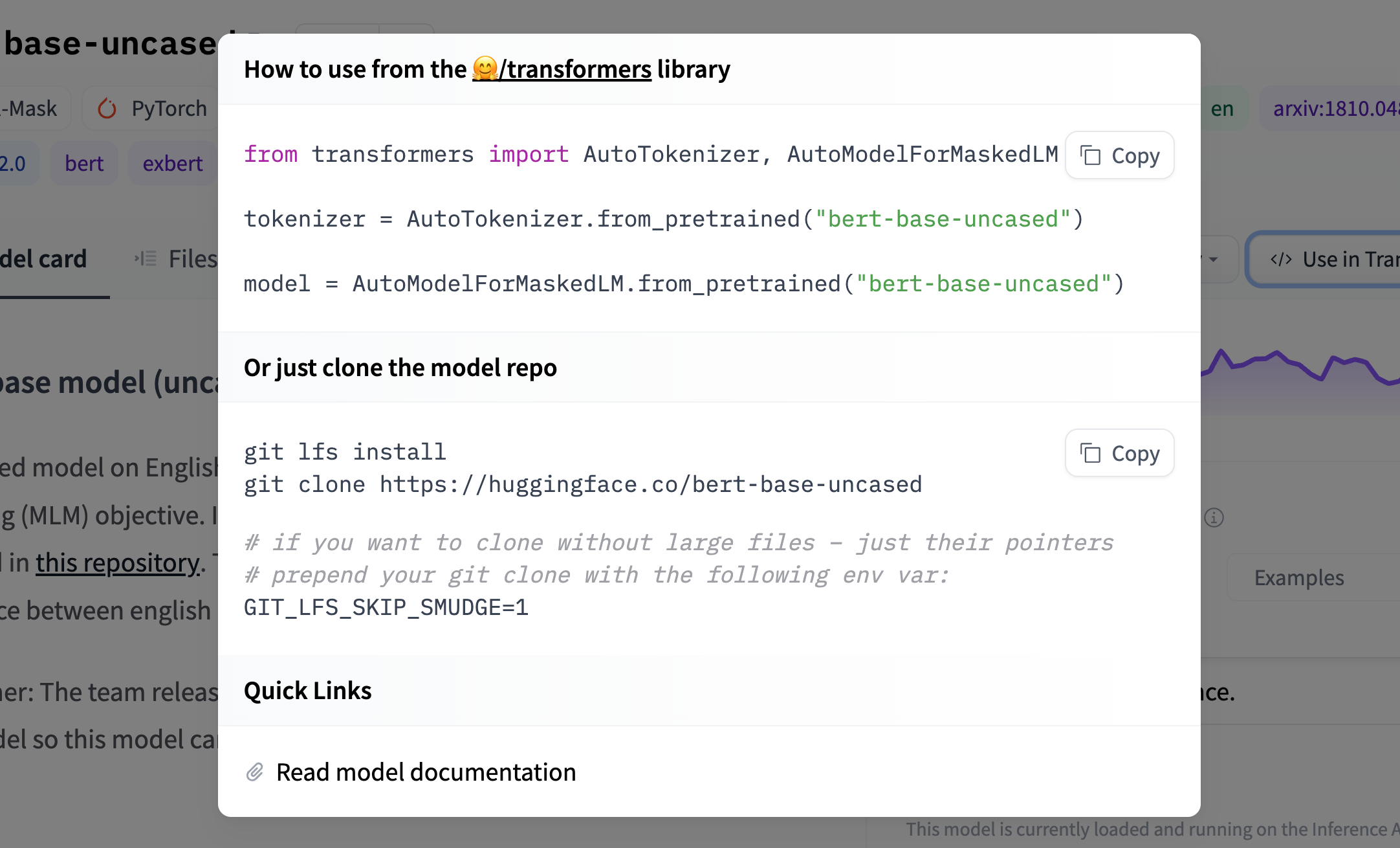

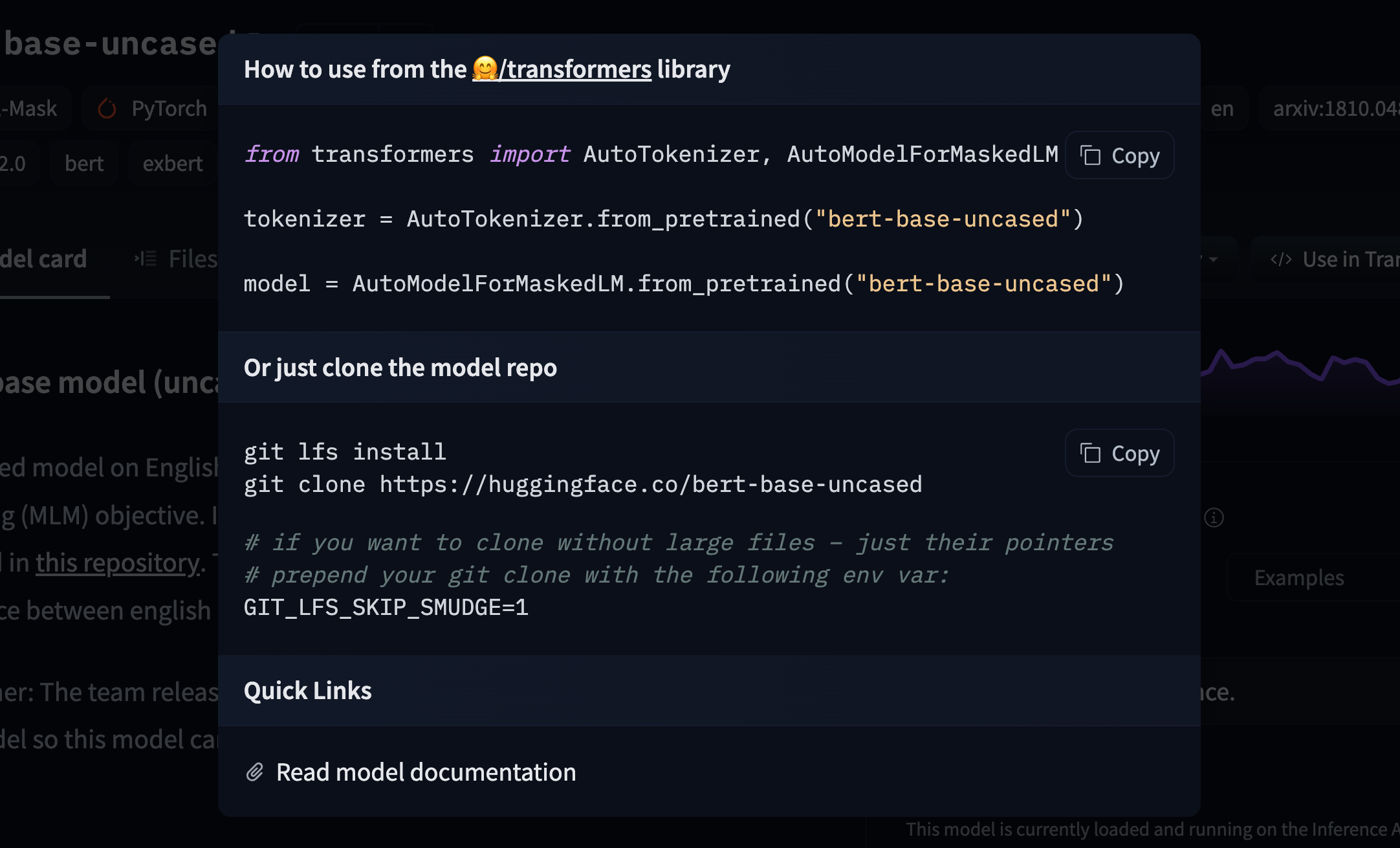

-We recommend adding a code snippet to explain how to use a model in your downstream library.
