
Find more information [here](https://github.com/ggerganov/llama.cpp/pull/5501).

+## Usage with GPT4All

+

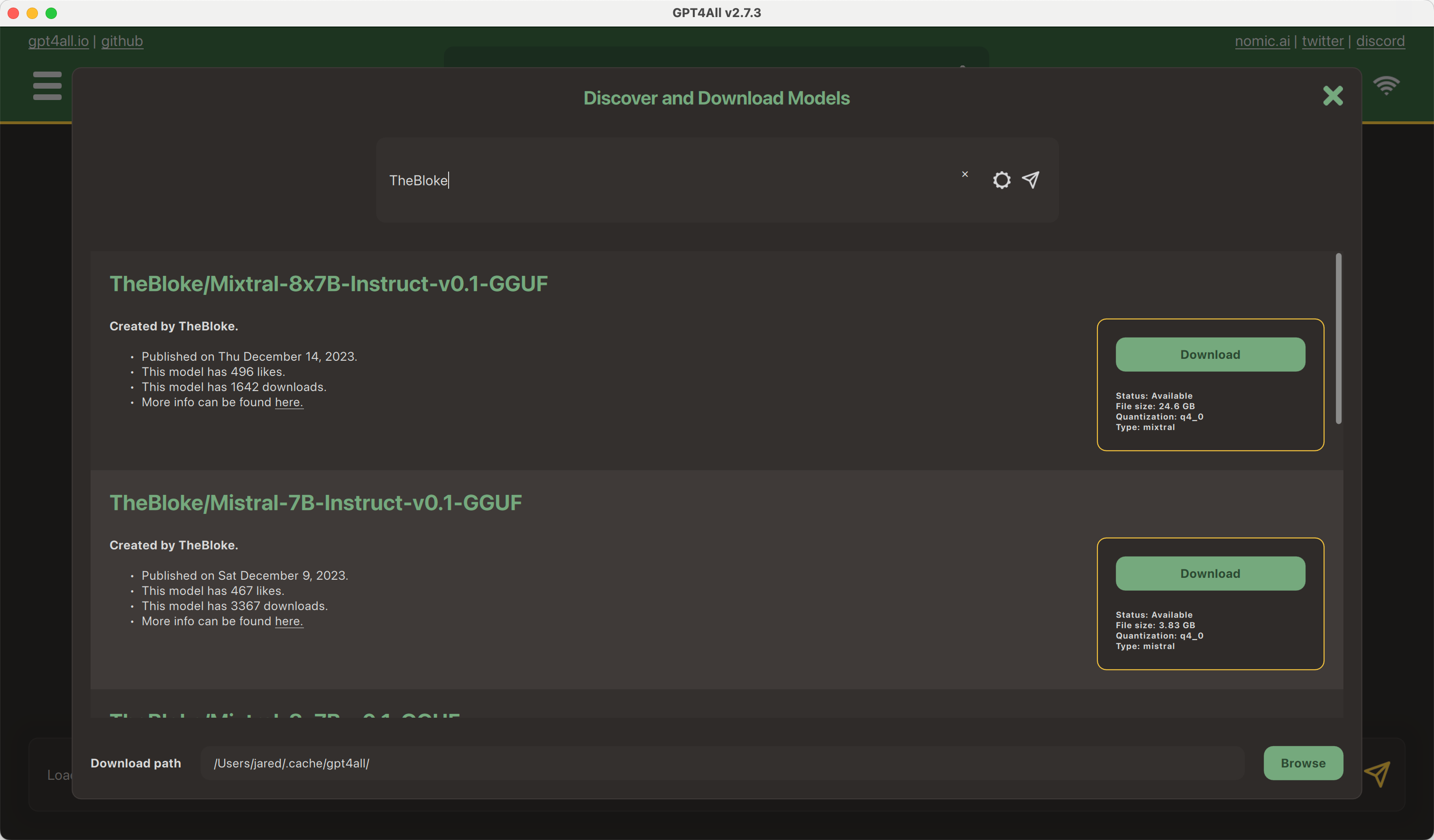

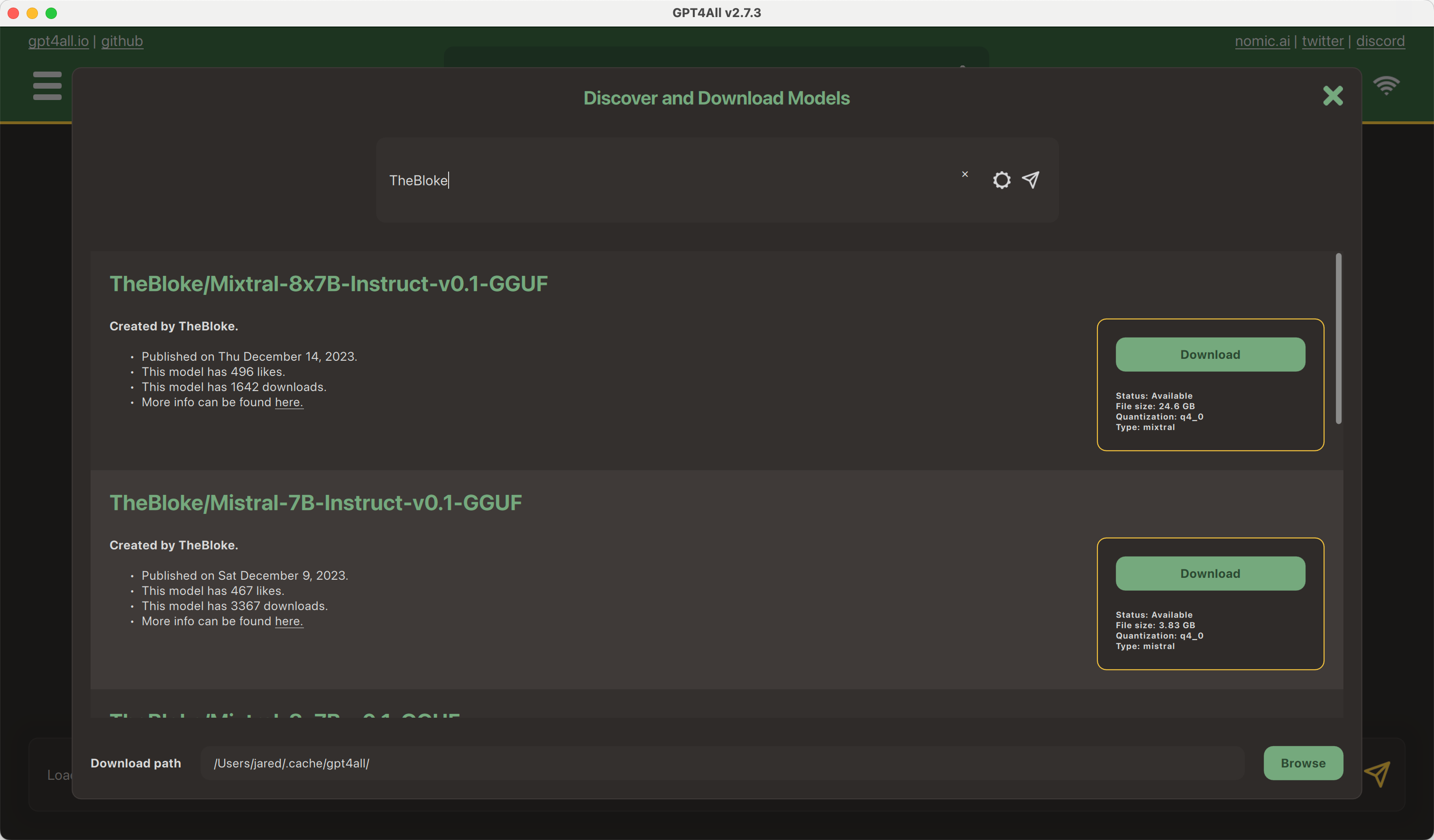

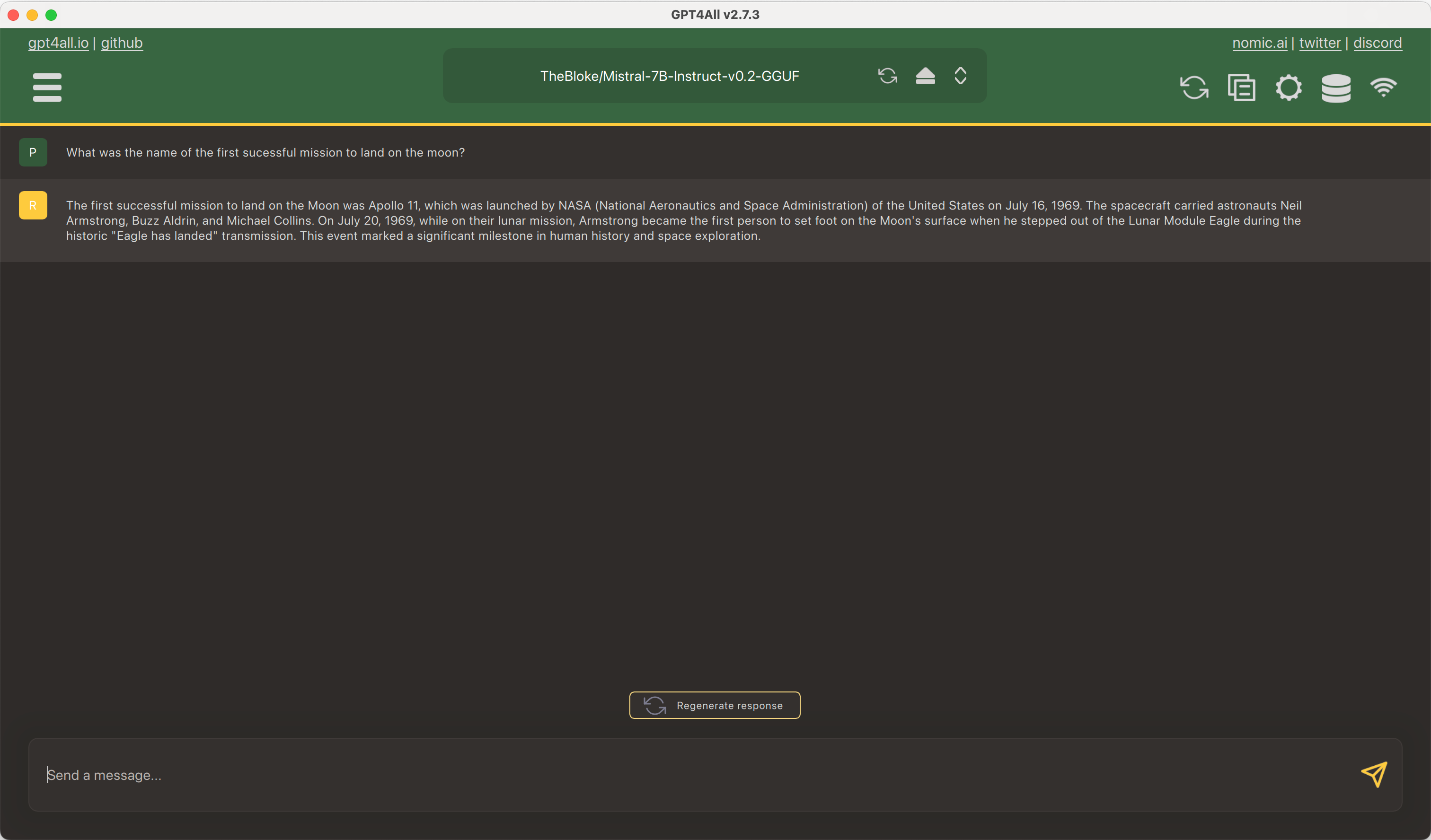

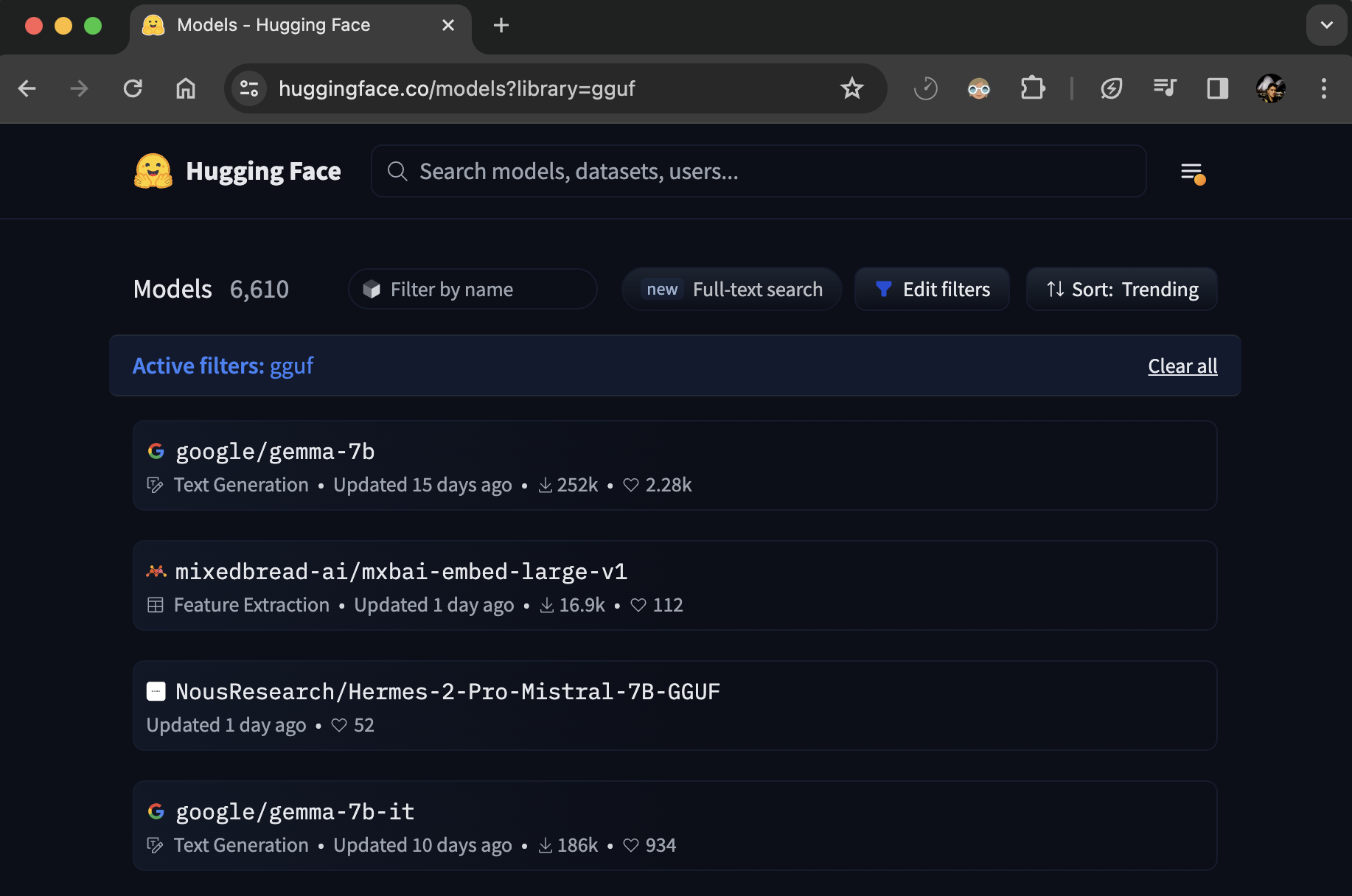

+[GPT4All](https://gpt4all.io/) is an open-source LLM application developed by [Nomic](https://nomic.ai/). Version 2.7.2 introduces a brand new, experimental feature called `Model Discovery`.

+

+`Model Discovery` provides a built-in way to search for and download GGUF models from the Hub. To get started, open GPT4All and click `Download Models`. From here, you can use the search bar to find a model.

+

+


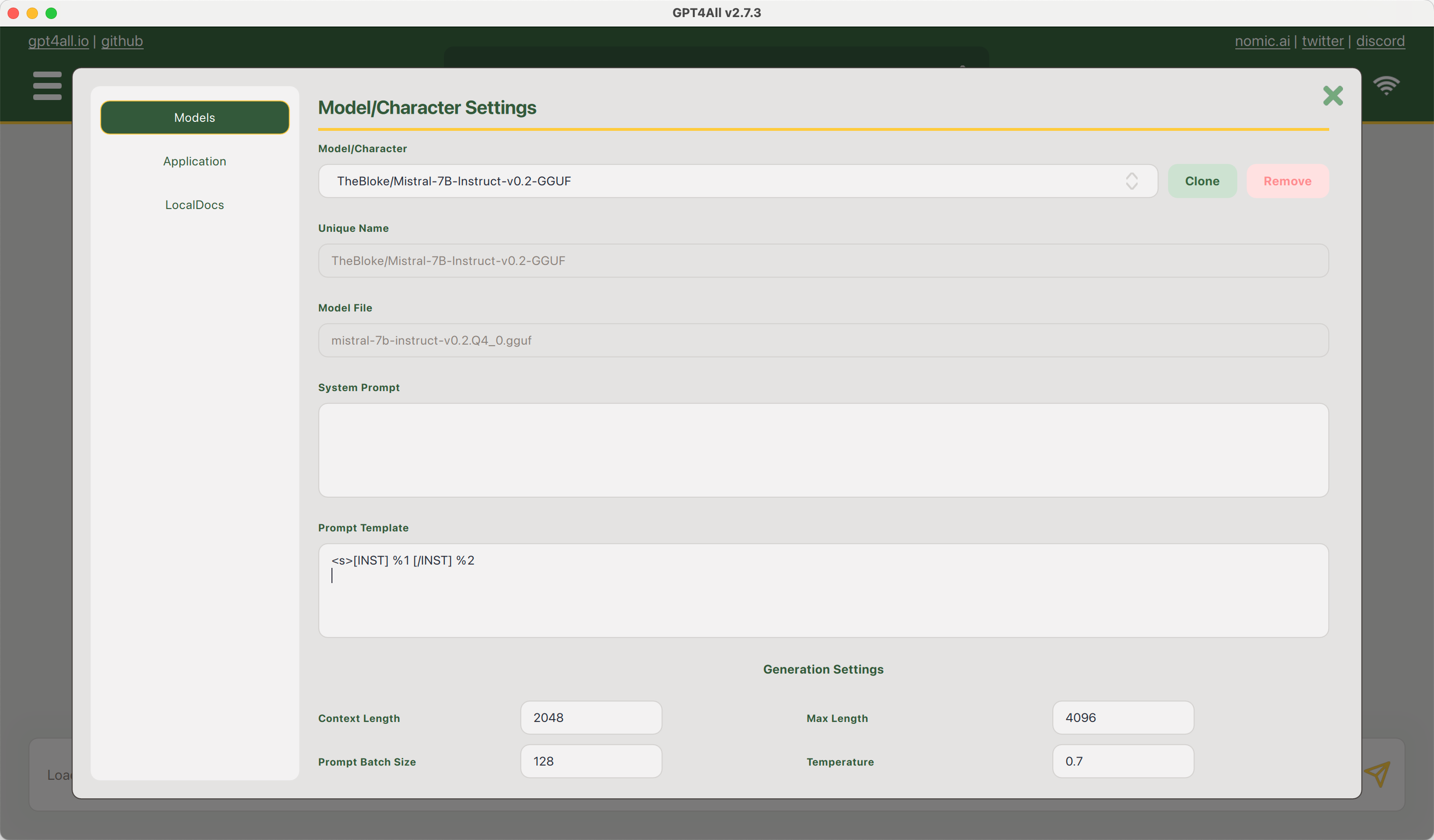

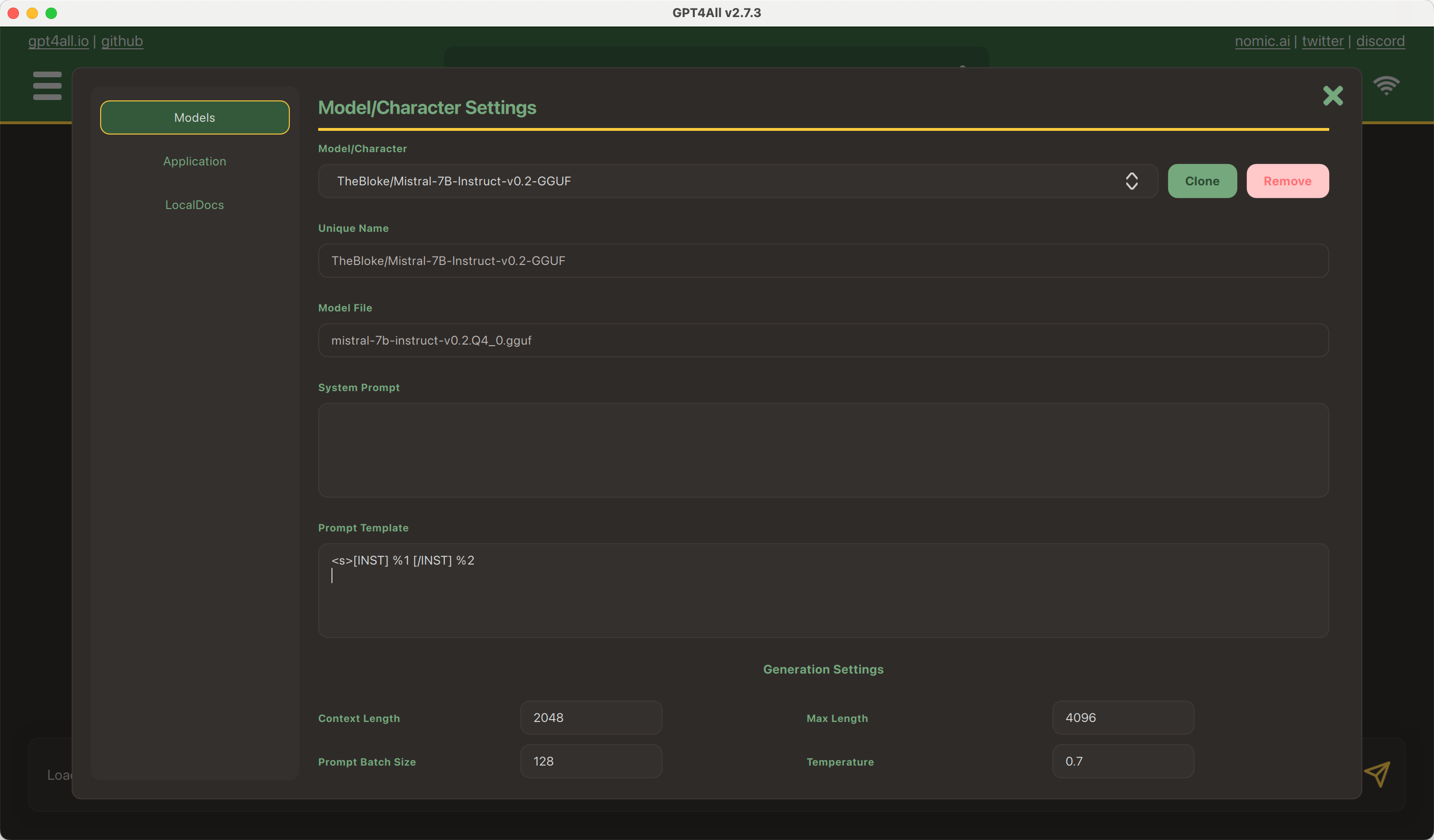

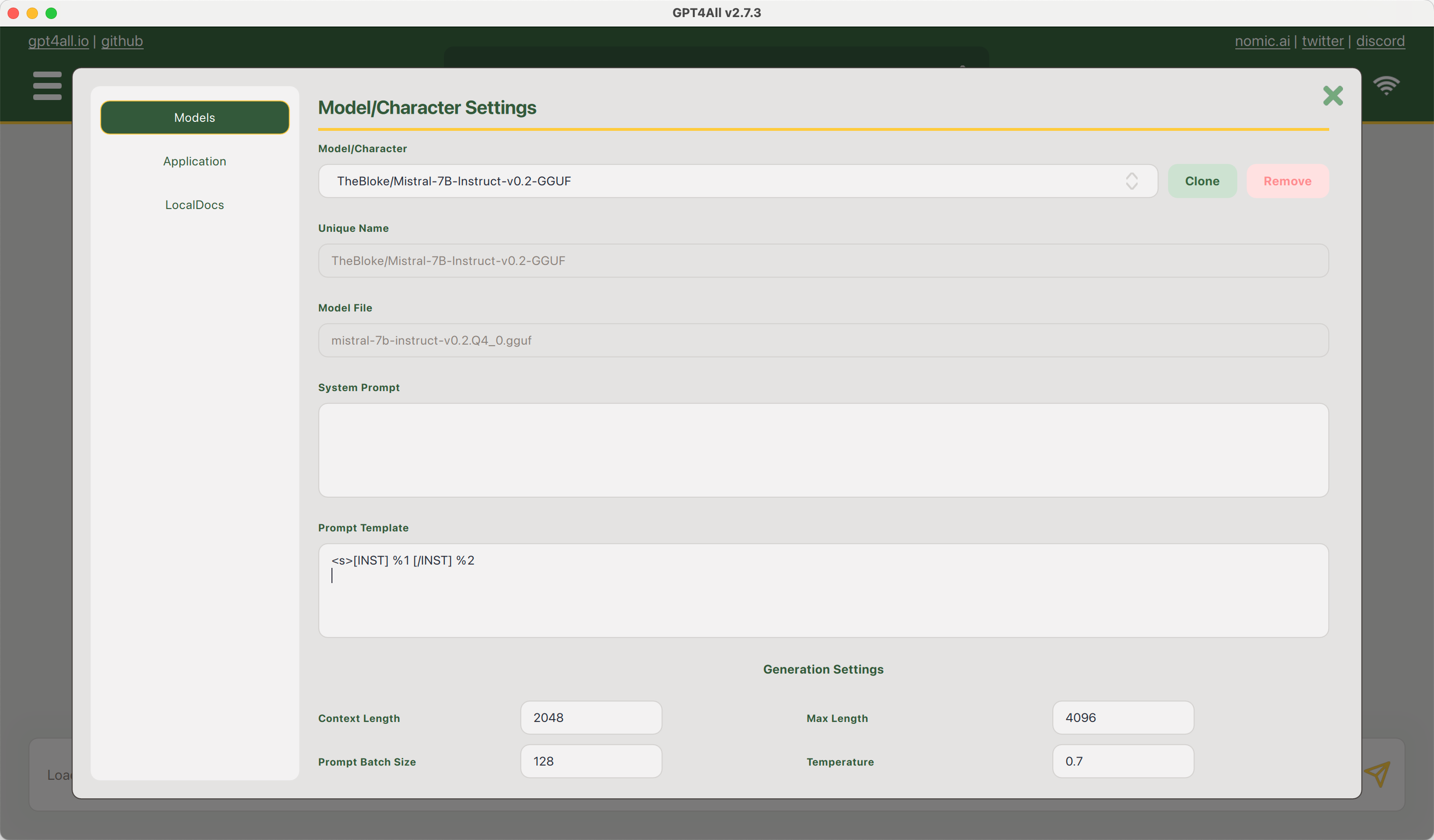

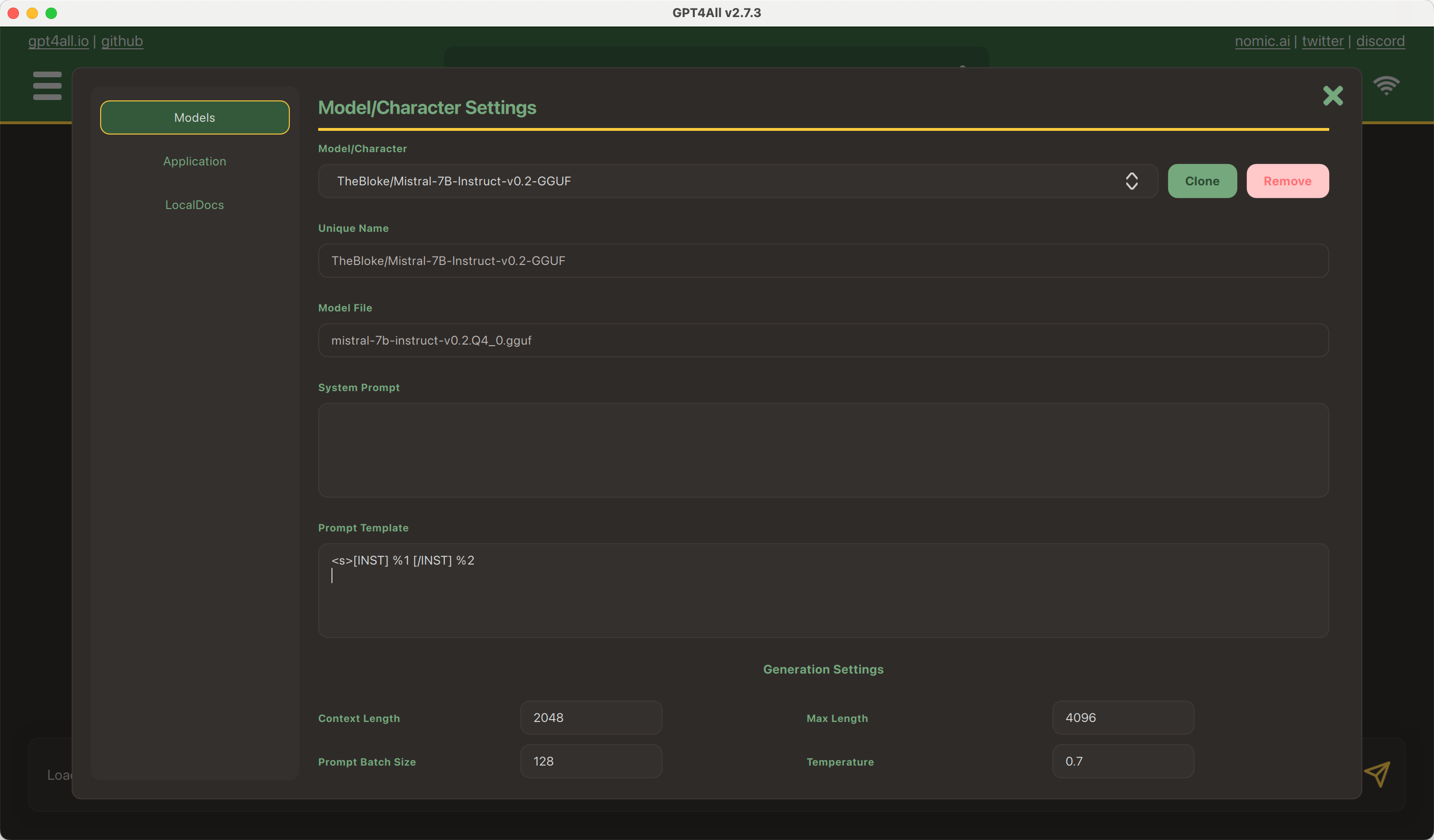

After selecting and downloading a model, go to `Settings` and provide a prompt template that matches the model's expected chat format, written in the GPT4All format (with `%1` and `%2` placeholders).


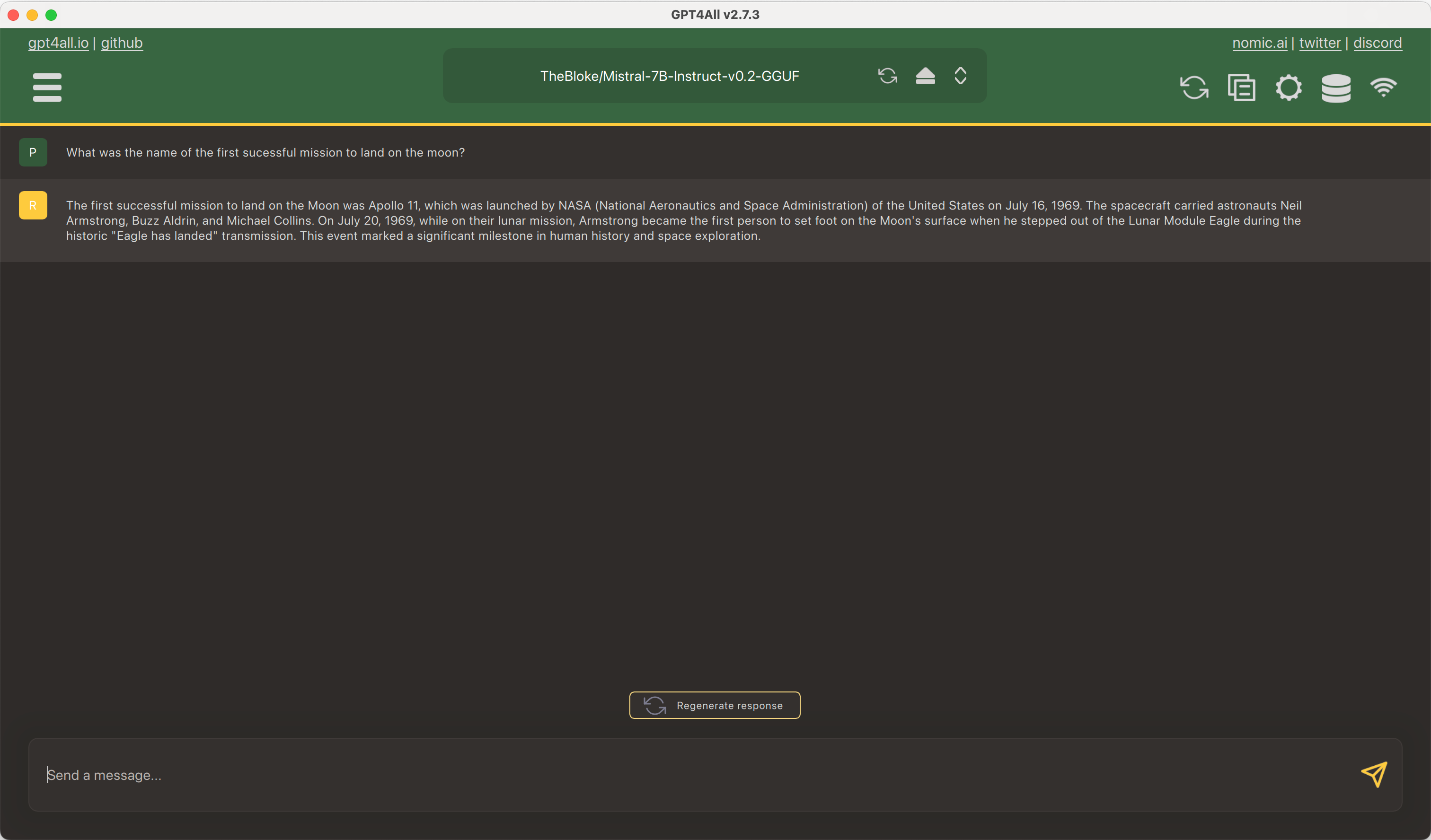
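To make the placeholder convention more concrete, here is a minimal TypeScript sketch of how such a template could be expanded into the text the model continues from. It assumes that `%1` stands for the user's message and that `%2` marks where the model's reply begins; the `fillTemplate` helper and the example template are hypothetical illustrations, not GPT4All's actual implementation.

```ts
// Hypothetical helper illustrating GPT4All-style prompt placeholders.
// Assumption: %1 = the user's message, %2 = where the model's reply starts.
function fillTemplate(template: string, userMessage: string): string {
  const replyMarker = "%2";
  const markerIndex = template.indexOf(replyMarker);
  // Keep only the part before %2: that is the text the model continues from.
  // If the template has no %2, use the whole template.
  const promptPart = markerIndex === -1 ? template : template.slice(0, markerIndex);
  // Substitute every %1 with the user's message.
  return promptPart.split("%1").join(userMessage);
}

// Example template (hypothetical; use the chat format your model was trained with).
const template = "### Human:\n%1\n### Assistant:\n%2";
console.log(fillTemplate(template, "What is GGUF?"));
```

Splitting at `%2` rather than replacing it reflects the assumption above: the reply is generated by the model, so nothing is substituted there on the user's side.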

Then, from the main page, you can select the model from the list of installed models and start a conversation.


## Parsing the metadata with @huggingface/gguf

We've also created a JavaScript GGUF parser that works on remotely hosted files (e.g., files on the Hugging Face Hub).

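```ts
import { gguf } from "@huggingface/gguf";

// URL of a remote GGUF file on the Hugging Face Hub
const URL_LLAMA = "https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/resolve/...";

// Parse the GGUF header into its metadata key-value pairs and tensor descriptors
const { metadata, tensorInfos } = await gguf(URL_LLAMA);
```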


Find more information [here](https://github.com/huggingface/huggingface.js/tree/main/packages/gguf).
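Once `gguf()` has returned, the parsed header can be inspected directly. The TypeScript sketch below derives a few summary values: the model architecture, its context length, and a parameter count obtained by multiplying out each tensor's shape. It assumes `metadata` is a plain key-value object using GGUF key names such as `general.architecture` and the architecture-prefixed `<arch>.context_length`, and that each entry of `tensorInfos` has a `shape` array of `bigint` or `number` dimensions. The local types and the `summarize` function are hypothetical and only mirror that assumed shape, so check them against the package's own type definitions.

```ts
// Hypothetical local types mirroring the assumed shape of what `gguf()` returns.
type MetadataLike = Record<string, unknown>;

interface TensorInfoLike {
  name: string;
  shape: ReadonlyArray<bigint | number>;
}

interface ModelSummary {
  architecture: string | undefined;
  contextLength: number | undefined;
  parameterCount: number;
}

function summarize(
  metadata: MetadataLike,
  tensorInfos: ReadonlyArray<TensorInfoLike>,
): ModelSummary {
  const arch = metadata["general.architecture"];
  const architecture = typeof arch === "string" ? arch : undefined;

  // Architecture-specific keys are prefixed with the architecture name,
  // e.g. "llama.context_length" for a Llama model.
  const rawCtx =
    architecture !== undefined ? metadata[`${architecture}.context_length`] : undefined;
  const contextLength =
    typeof rawCtx === "number" || typeof rawCtx === "bigint" ? Number(rawCtx) : undefined;

  // A tensor stores (product of its dimensions) values; summing over all
  // tensors gives the parameter count. Number stays exact up to 2^53.
  let parameterCount = 0;
  for (const info of tensorInfos) {
    parameterCount += info.shape.reduce<number>((acc, dim) => acc * Number(dim), 1);
  }

  return { architecture, contextLength, parameterCount };
}
```

With the parser output from the earlier snippet, this would be called as `summarize(metadata, tensorInfos)`; depending on the package version, the returned values may need a cast to these local types. Converting dimensions with `Number()` keeps the arithmetic simple for both `bigint` and `number` shapes.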


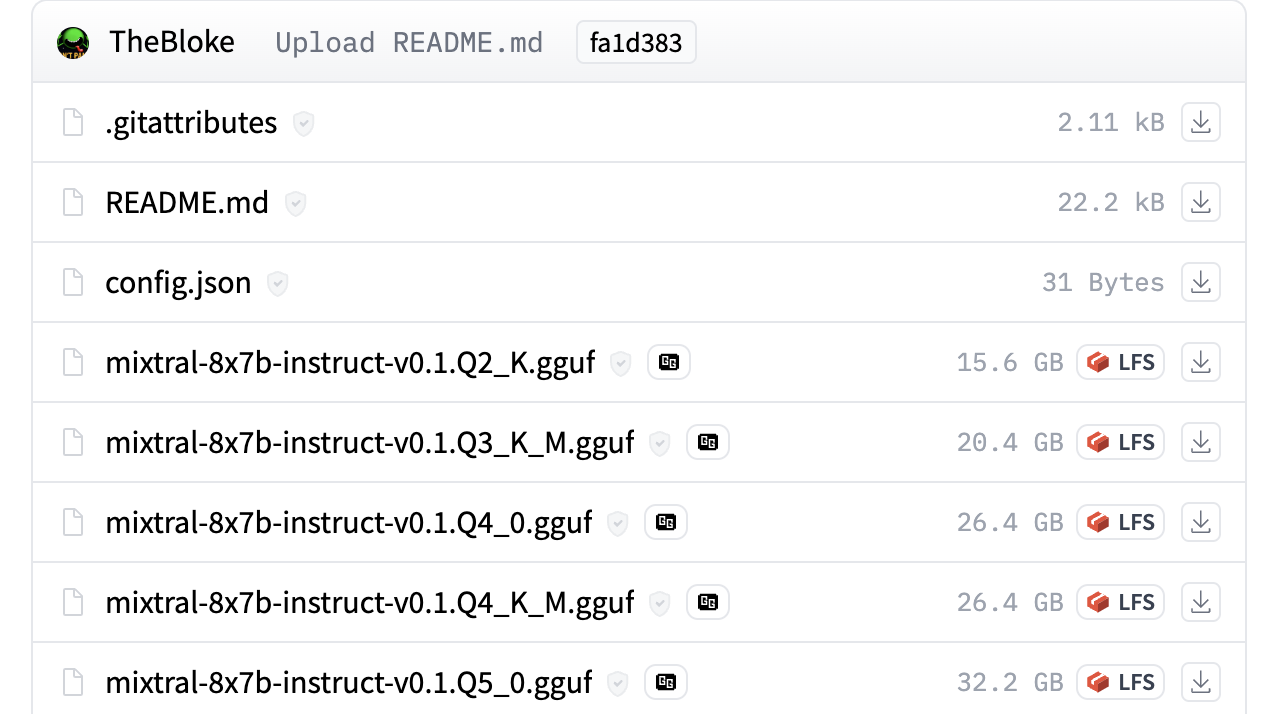

+ -For example, you can check out [TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF](https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF) for seeing GGUF files in action.

+For example, you can check out [TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF](https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF) for seeing GGUF files in action.

-For example, you can check out [TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF](https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF) for seeing GGUF files in action.

+For example, you can check out [TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF](https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF) for seeing GGUF files in action.